
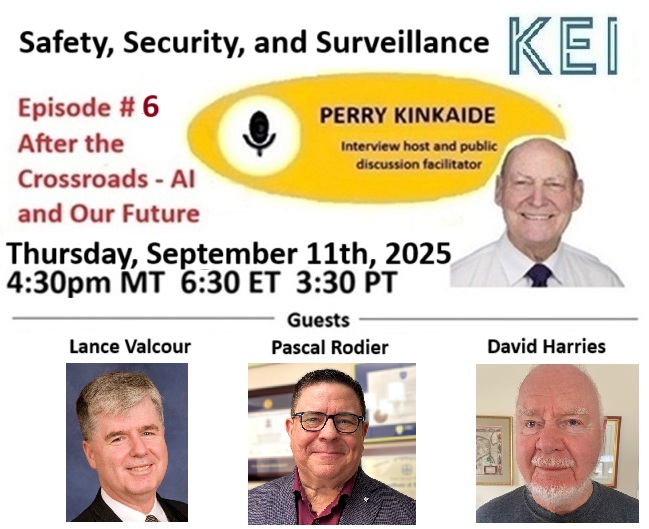

AI and OUR Future: Safety, Security, and Surveillance


Action: Help sustain KEI's contributions


Editor - Perry Kinkaide

Artificial intelligence is already reshaping safety: predicting wildfires, mapping floods, reducing crime, and strengthening emergency response. Its benefits are extraordinary, but so are the risks: biased policing, wrongful arrests, and surveillance systems that erode privacy. Yet these early applications are only the beginning. As AI evolves, we may anticipate systems that act independently of human judgment, merge with bioscience through biometric surveillance and genomic profiling, and expand into “supersensing” networks that track, predict, and respond in real time.


The promise is greater protection and resilience; the peril is overreach, misuse, and loss of personal freedom. The challenge is clear: guiding AI to secure both public safety and individual liberty as it advances into every dimension of our lives. - Editor


AI and OUR Future: Safety, Security, and Surveillance - The Promise, Peril, and the Public Good

Artificial intelligence has moved from the laboratory into the heart of society’s most fundamental systems of protection. Its impact is evident in policing, surveillance, emergency management, defense, and even the conduct of war. For every promise of improved safety, there is a parallel risk: bias, overreach, or the erosion of civil liberties. What follows explores these dimensions through evidence, examples, and the central dilemma of public versus personal interest.

Policing: From Patrols to Predictions. Predictive policing uses historical data (crime reports, arrest records, geospatial information) to forecast where crimes are most likely to occur. A RAND study (2023) found that predictive deployment reduced property crime in some pilot cities by up to 30%, though violent crime rates were largely unaffected.

The risk lies in feedback loops. A Stanford review (2022) showed that predictive models disproportionately flagged minority neighborhoods that were already over-policed, perpetuating inequities rather than reducing crime. Calls for algorithmic audits and transparency are growing louder.

Continued below

No need to register. Just Zoom in https://us02web.zoom.us/j/84258596166?pw..

Lance Valcour - retired from the Ottawa Police Service in 2010 after 33 years of service. He is an author, independent consultant, strategic adviser, marketing technologist, digital evangelist, coach, and internationally recognized keynote speaker and facilitator on issues including mental health, public safety information management, interoperability, and business development. Lance is the author of If They Only Knew: A Cop’s Journey with Addiction and Mental Health and is currently writing his next book, tentatively titled What’s Next. Lance was invested as an Officer of the Order of Merit of the Police Forces by the Governor General of Canada in 2010 and received the Queen’s Diamond Jubilee Medal in 2012. In May 2019, Lance received the Ontario Women in Law Enforcement’s Presidential Award, becoming one of the few men to receive this prestigious award.

David Harries - is a Canadian now based in Kingston who has lived in 20 countries and paid one or more working visits to another 93. He has experience in the public, private, and NGO sectors as a senior military officer, as a consultant in personal and corporate security, and as a senior advisor and university professor in heavy engineering, humanitarian aid, post-conflict and post-disaster response and recovery, civil-military relations, and executive professional development. He is a past Chair of the Canadian Pugwash Group, Foresight lead at Ideaconnector.net, a Principal of the Security and Sustainability Guide, a Fellow of the World Academy of Arts & Science, and a rugby coach.

Pascal Rodier - brings more than 35 years of leadership in public safety and emergency management. He began his career as a paramedic in Vancouver, later serving in leadership roles with Ambulance New Brunswick, and today is the Provincial Director of Emergency Preparedness for a Canadian health authority. Pascal has led responses to major events, from the 2010 Winter Olympics to the New Brunswick floods and the COVID-19 pandemic. A Certified Emergency Manager and Instructor in Canada’s Incident Command System, he has been nationally recognized with the Order of St. John and multiple service medals.

Continued from above

Facial recognition adds another layer. AI accuracy has improved dramatically, with error rates below 1% in controlled settings. Yet real-world use shows racial and gender disparities, which have led to wrongful arrests in the U.S. The ACLU and other groups argue for moratoria until safeguards are in place.

Surveillance: Society Under Watch. Global surveillance capacity is staggering. By 2024, there were more than 1 billion networked cameras worldwide, many integrated with AI. In China, “Safe City” programs combine camera feeds, financial transactions, and phone records, reportedly reducing petty crime and speeding case resolution by 40%.

In liberal democracies, resistance is higher. European courts have struck down some mass-surveillance projects, citing privacy protections under the GDPR. Still, law enforcement pressure for AI surveillance grows after terrorist attacks and public safety crises.

Emergency Management: Predicting and Responding to Disasters. AI offers undeniable promise in disaster response. Machine learning models can synthesize satellite imagery, social media reports, and weather data to pinpoint risks.

These systems exemplify AI at its best: augmenting human capacity to save lives. Yet critics warn against over-reliance: data gaps, false positives, or cyberattacks could cripple systems when they are most needed.

Defense: AI as the New Arms Race. Defense ministries are the most aggressive AI adopters. The U.S. Department of Defense spent over $3 billion in 2024 on AI, spanning logistics, decision-support, cyber-defense, and weapons development. NATO allies and China are racing ahead in parallel.

Autonomous drones illustrate both promise and peril. Equipped with AI vision, they can loiter, surveil, and strike without direct human control. Ukraine’s battlefield deployment of AI-enhanced targeting has demonstrated the strategic advantage of software-guided warfare.

But the dangers are profound. Imagine two AI-controlled missile defense systems interpreting a radar anomaly as an attack, triggering escalation in minutes, without time for human judgment. Analysts call these “flash wars,” accidents born of speed. The U.N. has debated bans on lethal autonomous weapons, but no consensus exists.

The Ethical Dilemma: Public vs. Personal Interest. At the core of AI in safety lies a moral calculus: When do collective interests justify suspending individual rights?

The principle of proportionality is key: interventions must be transparent, time-bound, and limited to the scale of the threat. Without guardrails, governments risk normalizing emergency powers into everyday governance.

Anticipating the Future. Looking ahead, AI will likely expand in three ways:

Citizens face a paradox: they demand safety but fear the tools designed to provide it. Balancing these demands will require new institutions and public engagement to ensure AI strengthens, rather than undermines, democratic values.

AI is not just another tool in the security arsenal. It is transforming the very architecture of protection: who is protected, how risks are assessed, and what rights are preserved or suspended in the process. The challenge is to prevent the pursuit of safety from becoming the justification for surveillance states or autonomous wars. AI can help us build resilience against crime, disasters, and aggression, but only if society insists that these systems remain accountable to human judgment and human values.
