
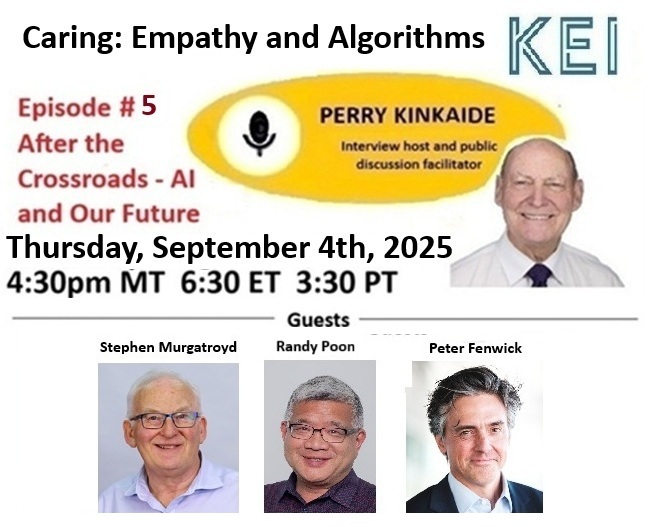

AI and OUR Future - Caring, Empathy, and Algorithms


Contributions: AI and OUR Future: Media in the Machine Age HERE

Action: Help sustain KEI's contributions

Fact or Fiction?

AI Is Humanity’s Most Influential Invention


Editor - Perry Kinkaide

For centuries, clients were captive to the exclusive knowledge of the professional. Today, with education, the internet, and now AI, knowledge is no longer exclusive. Professional authority is compromised, increasing the value of caring, compassion, and conscience: empathy in action, judgment in context, presence in practice. As the relevance of caring increases, so do the risks.


Caring has always carried questions of dosage, dignity, and dependence. Too much empathy can, as we have recently learned, reinforce the urge to suicide. And to protect dignity, practitioners must maintain boundaries, balancing independence with intimacy and trust with tenderness. Enter AI. It is already disrupting the professions, and it may soon surpass us with superintelligence, but can it also succeed at supercaring? Can algorithms convey empathy in just the right cadence, ethically, under every condition, personalized for every client? Consider also that caring is never one-way. Clients often wish to care back with gratitude, gifts, or gestures of support, friendship, love, or dependence. Here lies a delicate dilemma: when does kindness cross the line, and when does reciprocity risk eroding respect? Boundaries are the guardrails that preserve both dignity and trust. As AI intrudes with growing intensity, will it act as an aide or an alternative, not just with superintelligence but with supercaring? - Editor


AI in the Caring Professions: Partner, Disruptor, or Captor?

For decades, the professions of health, education, counseling, and faith-based care have been defined by exclusive rights of practice: legal authority granted by governments and delegated to self-regulating associations. These safeguards were designed to ensure competence, uphold ethical standards, and preserve public trust.

Yet as Daniel and Richard Susskind predicted in The Future of the Professions, these guardrails are giving way. The commoditization of knowledge, the empowerment of clients through online information, and the rapid advance of artificial intelligence (AI) have made disruption inevitable.

From Wholeness to Fragmentation

AI-powered systems promise personalization, precision, and efficiency, but they often achieve these gains by breaking down the human subject into diagnoses, data points, and behavioral predictions.

This is what some have called "prediction mistaken for presence": the illusion that knowing about someone equals truly knowing them.

(Continued below)

No need to register. Just Zoom in: https://us02web.zoom.us/j/84258596166?pw..

Stephen Murgatroyd - worked extensively in online and distance education, both at The Open University UK (1973-1985) and Athabasca University (1986-1998, 2003-2005). He has also consulted on flexible and innovative learning around the world. He was awarded an Honorary Doctorate in 2000 by Athabasca University for his pioneering work in online learning, and in 2025 he was awarded the King Charles III Coronation Medal for his services to higher education in Canada. He has written extensively on quality assurance and quality in education and has conducted QA reviews for agencies in Alberta, Ontario, the Maritimes, and the UAE.

Randy Poon - Chief Story Officer and Founder of the Purpose and Performance Project, a consulting and coaching collaborative that helps leaders and organizations align purpose with impact. He works across business, nonprofit, and community sectors to cultivate human-centered leadership, strengthen resilience, and foster cultures of meaning and performance. With a PhD in Organizational Leadership, Randy previously served as Associate Professor and Head of the Business Program at Ambrose University, and spent 18 years with the Government of Canada, concluding as Director General of Strategic Policy and Analysis at Canadian Heritage. His expertise spans narrative formation, mental fitness, and organizational development, equipping leaders and teams to weave purpose into daily practice. Beyond his professional roles, Randy serves as Board Chair for two faith-based charities, reflecting his ongoing commitment to faith and community. His focus on purpose, compassion, and wholeness informs both his personal life and his exploration of how technology, especially AI, can serve human connection and societal good.

Peter Fenwick - a Calgary- and Ottawa-based strategist, educator, and national leader in healthcare innovation, corporate growth, and leadership development. He leads Growth Catalyst at Mount Royal University, where he helps purpose-driven companies scale, and has held executive roles across both the public and private sectors, including as Vice President of Strategy at Green Shield Canada and Senior Provincial Director at Alberta Health Services. With a background spanning engineering, business, and systems thinking, Peter brings a human-centered lens to the future of AI in the caring professions, asking not only how we design systems that care, but why, and what we risk when compassion itself is coded into algorithms.

(Continued from above)

The Caring Professions Under Pressure

AI applications now perform core tasks once reserved for human professionals:

While these tools expand access and efficiency, they risk hollowing out the human dimension. Philosopher-educator Rudolf Steiner warned that true care must engage the whole human: body, soul, and spirit. AI, however, delivers a procedural facsimile of empathy, often meeting formal requirements while missing moral and relational depth.

Dependency and the Stockholm Parallel

Like hostages bonding with their captors, clients may develop loyalty to the very systems that limit them. AI’s constant availability, tailored feedback, and “always-on” reassurance can create technology dependency, making it harder to challenge the system even when errors or harm occur.

Systemic Shifts: Privatization and Global Competition

AI also blurs the line between public and private services:

This raises key questions:

Public Trust, Consumer Protection, and Regulation

With 80% of global digital ad revenue coming from hyper-targeted campaigns, and with people exposed to 6,000–10,000 ads daily, clients are vulnerable to over-promising, under-delivering, and manipulation. The same profiling that sells products can nudge medical decisions, educational pathways, and therapy uptake. Consumer protection in the AI era may require:

Training: A Stress Test

If AI can replicate large portions of a practitioner’s role, professional training must evolve. Future curricula should emphasize the uniquely human competencies AI cannot replicate:

Without this shift, the professions risk becoming transactional and fragmented: ironically, less caring in an era of “personalized” services.

A Choice Before the Window Closes

Superintelligent AI, forecast by some to arrive within a decade, will not merely assist decisions; it will make them. Without clear boundaries, society could face a superintelligent, supercaring system that models every preference, anticipates every fear, and yet never truly understands. The illusion is that more data means more truth. The reality is that to be known is not to be measured; to be cared for is not to be optimized. As AI permeates the caring professions, the central question is whether it will remain a partner in human development or become a subtle captor.
